
Before We Begin

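The main purpose of this post is to automate the complicated installation process that anyone learning Kubernetes has probably struggled with at least once. A second goal is to go one step beyond Minikube and build a multi-node test environment yourself for further Kubernetes study.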

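Please note that this environment is intended for learning and testing only. Prepare an Azure account in advance. To get the most out of this exercise, we also recommend going through the installation by hand with the official guide, either before or after this walkthrough.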

[Update notice, 2021-09-26]

- Fixed the Flannel API version error

- Wrote a verified version of the installation guide: verified up to cluster installation only (the dashboard section onward is omitted)

2021-09-26 [Verified] Building a Kubernetes Cluster in One Shot with Azure and Ansible

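If you plan to build the cluster by following this post, the video at http://youtube.com/watch?v=hyIoPMXri-s will be a helpful companion. It was recently verified, organized, and published by Youngrok Choi (최영락) of Microsoft.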

Also, if your goal is simply to set up an environment for Kubernetes hands-on practice, consider the Vagrant-based Kubernetes setup with the containerd runtime that I recently published on the Zerobig blog.

1. Create the VM Nodes for the Cluster on Azure

The nodes to create and their roles are listed below. (Hostnames and deployment settings can be adjusted to your own environment.)

| No. | Hostname | Role | Notes |
|---|---|---|---|
| 1 | k8s-master-az | Kubernetes Master node | |
| 2 | k8s-worker01-az, k8s-worker02-az | Kubernetes Worker nodes | |
| 3 | zero-vmc-az | Ansible control node and workstation node | |

Create the nodes above on Azure.

Start by creating the Master VM with the following settings:

- Resource group: Create new, with any name you like
- Region: Korea Central
- Image: CentOS-based 7.5
- Size: Default (D2s v3)
- Username: any name you like
- Public inbound ports: select "Allow selected ports"
- Selected inbound ports: check the ports you need (80, 443, 22, 3389, etc.)

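After making these selections, click "Review + create". When validation passes, click "Create". Then create the worker nodes and zero-vmc-az the same way, placing them all in the same virtual network so that they can reach each other over their private IPs.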
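If you prefer the command line, the portal steps above roughly correspond to the Azure CLI commands printed below. This is a dry-run sketch that only prints commands and runs nothing. The resource group `k8s-lab-rg`, the VNet `k8s-vnet`/subnet `default`, and the image URN `OpenLogic:CentOS:7.5:latest` are assumptions for illustration. Check them first, for example with `az vm image list`. Opening the extra inbound ports (80, 443, 3389) is not covered here.

```shell
#!/usr/bin/env bash
# Dry-run sketch: prints Azure CLI commands roughly equivalent to the portal steps.
# All names below are hypothetical placeholders; adjust before running anything.
RESOURCE_GROUP="k8s-lab-rg"            # hypothetical resource group name
LOCATION="koreacentral"                # Korea Central
IMAGE="OpenLogic:CentOS:7.5:latest"    # assumed URN for "CentOS-based 7.5"
SIZE="Standard_D2s_v3"                 # portal default "D2s v3"
VNET="k8s-vnet"                        # hypothetical shared VNet so nodes share private IPs
ADMIN_USER="zerobig"                   # replace with your own username

print_vm_commands() {
  echo "az group create --name ${RESOURCE_GROUP} --location ${LOCATION}"
  for vm in k8s-master-az k8s-worker01-az k8s-worker02-az zero-vmc-az; do
    # echo joins its arguments with single spaces, giving one command per line
    echo "az vm create --resource-group ${RESOURCE_GROUP} --name ${vm}" \
         "--image ${IMAGE} --size ${SIZE} --vnet-name ${VNET} --subnet default" \
         "--admin-username ${ADMIN_USER} --authentication-type password --admin-password <PASSWORD>"
  done
}

print_vm_commands
```

Printing instead of executing keeps the sketch safe to read and run. Copy individual lines only after replacing the placeholders.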

2. Prepare the Working Environment for Ansible Deployment

Because Ansible communicates over SSH, perform the following steps on every server, using Cloud Shell or a similar tool.

Change the root password, allow root login in the SSH server configuration, and restart sshd.
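The `vi` edit shown below can also be done non-interactively, which helps when repeating it on every node. This is a minimal sketch for this lab setup only. The function name `enable_root_login` is hypothetical. The demo runs on a temporary copy, and on a real node you would pass `/etc/ssh/sshd_config` as root and then run `systemctl restart sshd`.

```shell
#!/usr/bin/env bash
# Sketch: enable PermitRootLogin without opening vi (lab use only).
# enable_root_login is a hypothetical helper; it edits whichever file it is given.
enable_root_login() {
  local cfg="$1"
  if grep -qE '^[#[:space:]]*PermitRootLogin' "$cfg"; then
    # Replace an existing, possibly commented-out, directive in place.
    sed -i -E 's/^[#[:space:]]*PermitRootLogin.*/PermitRootLogin yes/' "$cfg"
  else
    # No directive present at all: append one.
    echo 'PermitRootLogin yes' >> "$cfg"
  fi
}

# Demo on a temporary file instead of the real /etc/ssh/sshd_config.
tmp_cfg="$(mktemp)"
printf '#LoginGraceTime 2m\n#PermitRootLogin no\n#StrictModes yes\n' > "$tmp_cfg"
enable_root_login "$tmp_cfg"
cat "$tmp_cfg"
```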

Requesting a Cloud Shell.Succeeded.

Connecting terminal...

zerobig_kim@Azure:~$ ssh zerobig@52.231.26.116

The authenticity of host '52.231.26.116 (52.231.26.116)' can't be established.

ECDSA key fingerprint is SHA256:SyMgtXlBM6zp7U3hh7xfopefhjZvqI/U8M6mM/OfAaY.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added '52.231.26.116' (ECDSA) to the list of known hosts.

Password:

[zerobig@k8s-master-az ~]$

[zerobig@k8s-master-az ~]$ sudo su

We trust you have received the usual lecture from the local System

Administrator. It usually boils down to these three things:

#1) Respect the privacy of others.

#2) Think before you type.

#3) With great power comes great responsibility.

[sudo] password for zerobig:

[root@k8s-master-az zerobig]# passwd

Changing password for user root.

New password:

BAD PASSWORD: The password contains less than 1 digits

Retype new password:

passwd: all authentication tokens updated successfully.

[root@k8s-master-az zerobig]# vi /etc/ssh/sshd_config

<omitted above>

#LoginGraceTime 2m

PermitRootLogin yes

#StrictModes yes

#MaxAuthTries 6

<omitted below>

[root@k8s-master-az zerobig]# systemctl restart sshd

Add each node's private IP to the /etc/hosts file.

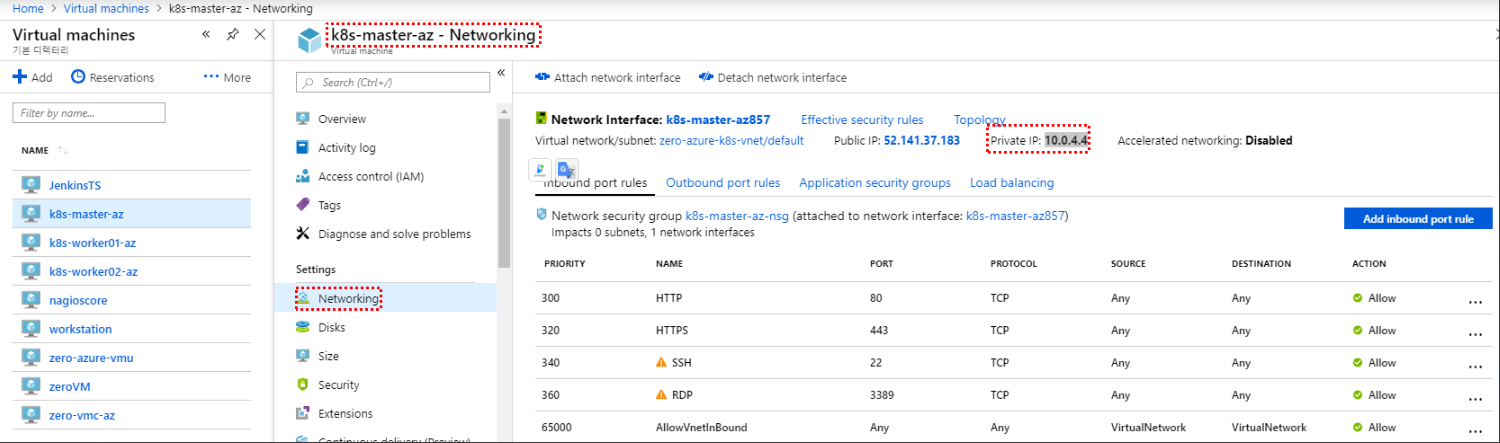

You can find each node's private IP on its "Networking" page.
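Because the same entries are needed on every server, a re-runnable helper can save some typing. This is a sketch only. The helper name `add_cluster_hosts` is hypothetical, and the IP/hostname pairs are the ones used in this post, so adjust them to your VNet. Run as root with `/etc/hosts` as the argument. The demo uses a temporary file.

```shell
#!/usr/bin/env bash
# Sketch: append cluster entries to a hosts file only if they are missing,
# so running it twice does not create duplicates.
add_cluster_hosts() {
  local hosts_file="$1" entry
  for entry in \
    "10.0.4.4 k8s-master-az" \
    "10.0.4.5 k8s-worker01-az" \
    "10.0.4.6 k8s-worker02-az" \
    "10.0.4.7 k8s-vmc-az"; do
    # -x: whole-line match, -F: fixed string (dots are not regex wildcards)
    grep -qxF "$entry" "$hosts_file" || echo "$entry" >> "$hosts_file"
  done
}

tmp_hosts="$(mktemp)"
echo "127.0.0.1 localhost" > "$tmp_hosts"
add_cluster_hosts "$tmp_hosts"
add_cluster_hosts "$tmp_hosts"   # second run adds nothing
cat "$tmp_hosts"
```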

[root@k8s-master-az zerobig]# vi /etc/hosts

10.0.4.4 k8s-master-az

10.0.4.5 k8s-worker01-az

10.0.4.6 k8s-worker02-az

10.0.4.7 k8s-vmc-az

Next, install Ansible on zero-vmc-az.

First add the EPEL repository and install Ansible, then verify the installation by checking the version.

Last login: Fri Apr 5 00:03:55 2019

[root@zero-vmc-az ~]# yum install epel-release -y

Loaded plugins: fastestmirror, langpacks

Determining fastest mirrors

<omitted below>

[root@zero-vmc-az ~]# yum install ansible -y

Loaded plugins: fastestmirror, langpacks

Loading mirror speeds from cached hostfile

Resolving Dependencies

<omitted>

Installed

ansible.noarch 0:2.4.2.0-2.el7

Dependency Installed:

python-babel.noarch 0:0.9.6-8.el7 python-backports.x86_64 0:1.0-8.el7 python-backports-ssl_match_hostname.noarch 0:3.5.0.1-1.el7 python-cffi.x86_64 0:1.6.0-5.el7 python-enum34.noarch 0:1.0.4-1.el7

python-httplib2.noarch 0:0.9.2-1.el7 python-idna.noarch 0:2.4-1.el7 python-ipaddress.noarch 0:1.0.16-2.el7 python-jinja2.noarch 0:2.7.2-2.el7 python-markupsafe.x86_64 0:0.11-10.el7

python-paramiko.noarch 0:2.1.1-9.el7 python-passlib.noarch 0:1.6.5-2.el7 python-ply.noarch 0:3.4-11.el7 python-pycparser.noarch 0:2.14-1.el7 python-setuptools.noarch 0:0.9.8-7.el7

python2-cryptography.x86_64 0:1.7.2-2.el7 python2-jmespath.noarch 0:0.9.0-3.el7 sshpass.x86_64 0:1.06-2.el7

Complete!

[root@zero-vmc-az ~]# ansible --version

ansible 2.4.2.0

config file = /etc/ansible/ansible.cfg

configured module search path = [u'/root/.ansible/plugins/modules', u'/usr/share/ansible/plugins/modules']

ansible python module location = /usr/lib/python2.7/site-packages/ansible

executable location = /usr/bin/ansible

python version = 2.7.5 (default, Jul 13 2018, 13:06:57) [GCC 4.8.5 20150623 (Red Hat 4.8.5-28)]

Generate an SSH key for passwordless SSH access, copy it to each managed host node, and then verify that you can log in without a password.

[root@zero-vmc-az ~]# ssh-keygen

Generating public/private rsa key pair.

Enter file in which to save the key (/root/.ssh/id_rsa):

Created directory '/root/.ssh'.

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /root/.ssh/id_rsa.

Your public key has been saved in /root/.ssh/id_rsa.pub.

The key fingerprint is:

SHA256:MMXfxtcAiYB4TBeXYsbR8LNBglvyNmuQdLMqIDSFKcA root@zero-vmc-az

The key's randomart image is:

+---[RSA 2048]----+

|+ +. +.=O*oo.o |

|.E . BoX=+ . . |

|o . ooX =+o o |

|. . +o= .++ . .|

| . . +So.. . |

| . . o |

| . . |

| |

| |

+----[SHA256]-----+

[root@zero-vmc-az ~]# ssh-copy-id 10.0.4.4

/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/root/.ssh/id_rsa.pub"

The authenticity of host '10.0.4.4 (10.0.4.4)' can't be established.

ECDSA key fingerprint is SHA256:snJrznEt04DBsqSrB4+3zChNdJI4bq3Z+SUnceL1vRU.

ECDSA key fingerprint is MD5:f4:ec:20:be:28:15:20:fc:40:19:de:7a:67:af:fa:11.

Are you sure you want to continue connecting (yes/no)? yes

/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

Password:

Number of key(s) added: 1

Now try logging into the machine, with: "ssh '10.0.4.4'"

and check to make sure that only the key(s) you wanted were added.

[root@zero-vmc-az ~]# ssh-copy-id 10.0.4.5

/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/root/.ssh/id_rsa.pub"

The authenticity of host '10.0.4.5 (10.0.4.5)' can't be established.

ECDSA key fingerprint is SHA256:HOr4+6z2WTEr+XlDz/cTFnZuuo0q44yL0DOH/X0kxvQ.

ECDSA key fingerprint is MD5:86:f9:a0:d0:98:8f:41:dd:b3:cd:9f:8a:0c:d6:c0:14.

Are you sure you want to continue connecting (yes/no)? yes

/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

Password:

Number of key(s) added: 1

Now try logging into the machine, with: "ssh '10.0.4.5'"

and check to make sure that only the key(s) you wanted were added.

[root@zero-vmc-az ~]# ssh-copy-id 10.0.4.6

/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/root/.ssh/id_rsa.pub"

The authenticity of host '10.0.4.6 (10.0.4.6)' can't be established.

ECDSA key fingerprint is SHA256:sP1rLXgyHQBoyfQ/hI1E6BOIuaJ0Rbb5EXXmDx3YKlQ.

ECDSA key fingerprint is MD5:e0:ac:bd:f6:bd:cb:36:4b:e9:b3:0b:56:dc:0d:5b:73.

Are you sure you want to continue connecting (yes/no)? yes

/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

Password:

Number of key(s) added: 1

Now try logging into the machine, with: "ssh '10.0.4.6'"

and check to make sure that only the key(s) you wanted were added.

[root@zero-vmc-az ~]# ssh k8s-master-az

The authenticity of host 'k8s-master-az (10.0.4.4)' can't be established.

ECDSA key fingerprint is SHA256:snJrznEt04DBsqSrB4+3zChNdJI4bq3Z+SUnceL1vRU.

ECDSA key fingerprint is MD5:f4:ec:20:be:28:15:20:fc:40:19:de:7a:67:af:fa:11.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added 'k8s-master-az' (ECDSA) to the list of known hosts.

Last login: Fri Apr 5 00:42:41 2019 from k8s-vmc-az

[root@k8s-master-az ~]# exit

logout

Connection to k8s-master-az closed.

[root@zero-vmc-az ~]# ssh k8s-worker01-az

The authenticity of host 'k8s-worker01-az (10.0.4.5)' can't be established.

ECDSA key fingerprint is SHA256:HOr4+6z2WTEr+XlDz/cTFnZuuo0q44yL0DOH/X0kxvQ.

ECDSA key fingerprint is MD5:86:f9:a0:d0:98:8f:41:dd:b3:cd:9f:8a:0c:d6:c0:14.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added 'k8s-worker01-az' (ECDSA) to the list of known hosts.

Last login: Fri Apr 5 00:42:59 2019 from k8s-vmc-az

[root@k8s-worker01-az ~]# exit

logout

Connection to k8s-worker01-az closed.

[root@zero-vmc-az ~]# ssh k8s-worker02-az

The authenticity of host 'k8s-worker02-az (10.0.4.6)' can't be established.

ECDSA key fingerprint is SHA256:sP1rLXgyHQBoyfQ/hI1E6BOIuaJ0Rbb5EXXmDx3YKlQ.

ECDSA key fingerprint is MD5:e0:ac:bd:f6:bd:cb:36:4b:e9:b3:0b:56:dc:0d:5b:73.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added 'k8s-worker02-az' (ECDSA) to the list of known hosts.

Last login: Fri Apr 5 00:12:08 2019

[root@k8s-worker02-az ~]# exit

logout

Connection to k8s-worker02-az closed.

Create the inventory file hosts as follows, and check connectivity with Ansible's ping module.
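The inventory can also be written with a heredoc and sanity-checked before use. This is a sketch. `write_inventory` and `check_inventory` are hypothetical helpers, and the contents mirror the inventory in this post. The check only confirms that every host line carries `ansible_host=`. It does not validate the INI format fully.

```shell
#!/usr/bin/env bash
# Sketch: write the inventory used in this post and check each host line.
write_inventory() {
  cat > "$1" <<'EOF'
master ansible_host=10.0.4.4 ansible_user=root

[workers]
worker1 ansible_host=10.0.4.5 ansible_user=root
worker2 ansible_host=10.0.4.6 ansible_user=root
EOF
}

check_inventory() {
  # Skip blank lines, comments and [group] headers; flag hosts without ansible_host.
  awk 'NF == 0 || substr($1, 1, 1) == "#" || substr($1, 1, 1) == "[" { next }
       $0 !~ /ansible_host=/ { bad++; print "missing ansible_host: " $1 }
       END { exit (bad > 0) }' "$1"
}

inv="$(mktemp)"
write_inventory "$inv"
check_inventory "$inv" && echo "inventory OK"
```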

[root@zero-vmc-az ~]# vi hosts

master ansible_host=10.0.4.4 ansible_user=root

[workers]

worker1 ansible_host=10.0.4.5 ansible_user=root

worker2 ansible_host=10.0.4.6 ansible_user=root

[root@zero-vmc-az ~]# ansible -m ping all -i hosts

worker2 | SUCCESS => {

"changed": false,

"ping": "pong"

}

master | SUCCESS => {

"changed": false,

"ping": "pong"

}

worker1 | SUCCESS => {

"changed": false,

"ping": "pong"

}

If every host returns SUCCESS and "pong", connectivity is working.
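To use this check in a script rather than reading the output by eye, you can count the `| SUCCESS` result headers and compare the count with the number of hosts. This is a sketch. `all_hosts_pong` is a hypothetical helper, and the sample text is illustrative rather than captured output. In real use you would run `ansible -m ping all -i hosts | all_hosts_pong 3`. Ansible itself also usually exits non-zero when a host is unreachable.

```shell
#!/usr/bin/env bash
# Sketch: succeed only if the expected number of hosts reported SUCCESS.
# Reads captured `ansible -m ping all -i hosts` output on stdin.
all_hosts_pong() {
  local expected="$1" ok
  # Count result headers such as "worker2 | SUCCESS => {"
  ok="$(grep -cE '^[^[:space:]]+ \| SUCCESS')"
  [ "$ok" -eq "$expected" ]
}

sample_ok='worker2 | SUCCESS => {
    "ping": "pong"
}
master | SUCCESS => {
    "ping": "pong"
}
worker1 | SUCCESS => {
    "ping": "pong"
}'
sample_bad='worker2 | SUCCESS => {
    "ping": "pong"
}
worker1 | UNREACHABLE! => {
    "unreachable": true
}'

printf '%s\n' "$sample_ok"  | all_hosts_pong 3 && echo "sample_ok: all 3 hosts answered"
printf '%s\n' "$sample_bad" | all_hosts_pong 3 || echo "sample_bad: at least one host failed"
```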

As a warm-up, send a few simple commands and confirm that each host responds as expected.

[root@zero-vmc-az ~]# ansible -m command -a "date" all -i hosts

worker1 | SUCCESS | rc=0 >>

Fri Apr 5 01:08:42 UTC 2019

master | SUCCESS | rc=0 >>

Fri Apr 5 01:08:42 UTC 2019

worker2 | SUCCESS | rc=0 >>

Fri Apr 5 01:08:42 UTC 2019

[root@zero-vmc-az ~]# ansible -m command -a "uname -a" all -i hosts

worker2 | SUCCESS | rc=0 >>

Linux k8s-worker02-az 3.10.0-862.11.6.el7.x86_64 #1 SMP Tue Aug 14 21:49:04 UTC 2018 x86_64 x86_64 x86_64 GNU/Linux

worker1 | SUCCESS | rc=0 >>

Linux k8s-worker01-az 3.10.0-862.11.6.el7.x86_64 #1 SMP Tue Aug 14 21:49:04 UTC 2018 x86_64 x86_64 x86_64 GNU/Linux

master | SUCCESS | rc=0 >>

Linux k8s-master-az 3.10.0-862.11.6.el7.x86_64 #1 SMP Tue Aug 14 21:49:04 UTC 2018 x86_64 x86_64 x86_64 GNU/Linux

Looks good!

Everything is now ready for the main task. ^^

3. Deploy with Ansible

First, install git so that you can download the installation sources.

[root@zero-vmc-az ~]# yum install git

Loaded plugins: fastestmirror, langpacks

Loading mirror speeds from cached hostfile

Resolving Dependencies

<omitted>

Installed:

git.x86_64 0:1.8.3.1-20.el7

Dependency Installed:

perl-Error.noarch 1:0.17020-2.el7 perl-Git.noarch 0:1.8.3.1-20.el7 perl-TermReadKey.x86_64 0:2.30-20.el7

Complete!

Clone the installation sources from https://github.com/zer0big/ansible-k8s-cluster.git, change into the cloned directory, and copy in the hosts inventory file created earlier. Then make building-k8s-cluster.sh in that directory executable.

[root@zero-vmc-az ~]# git clone https://github.com/zer0big/ansible-k8s-cluster.git

Cloning into 'ansible-k8s-cluster'...

remote: Enumerating objects: 14, done.

remote: Counting objects: 100% (14/14), done.

remote: Compressing objects: 100% (12/12), done.

remote: Total 14 (delta 2), reused 10 (delta 1), pack-reused 0

Unpacking objects: 100% (14/14), done.

[root@zero-vmc-az ~]# cd ansible-k8s-cluster/

[root@zero-vmc-az ansible-k8s-cluster]# cp ../hosts ./

[root@zero-vmc-az ansible-k8s-cluster]# chmod a+x building-k8s-cluster.sh

[root@zero-vmc-az ansible-k8s-cluster]# ls -rlht

total 20K

-rw-r--r--. 1 root root 529 Apr 5 01:10 workers.yml

-rw-r--r--. 1 root root 1013 Apr 5 01:10 master.yml

-rw-r--r--. 1 root root 1.4K Apr 5 01:10 kube-dependencies.yml

-rw-r--r--. 1 root root 154 Apr 5 01:10 hosts

-rwxr-xr-x. 1 root root 2.9K Apr 5 01:22 building-k8s-cluster.sh

On line 43 of building-k8s-cluster.sh, change the Master hostname to match your environment, then run the script.
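If you would rather not open `vi`, you can change the hostname on the `scp` line with `sed`. This is a sketch. `set_master_host` is a hypothetical helper and `my-master` is a placeholder hostname. It matches the `scp ...:/etc/kubernetes/admin.conf` line shown below rather than relying on the line number, so it still works if the script changes slightly. The demo uses a small temporary file.

```shell
#!/usr/bin/env bash
# Sketch: point the admin.conf copy step at a different master hostname.
set_master_host() {
  local script="$1" new_host="$2"
  # Only rewrites the line that copies admin.conf from the master.
  sed -i -E "s#^scp [^:]+:/etc/kubernetes/admin.conf#scp ${new_host}:/etc/kubernetes/admin.conf#" "$script"
}

demo="$(mktemp)"
printf 'mkdir ~/.kube\nscp k8s-master-az:/etc/kubernetes/admin.conf ~/.kube/config\n' > "$demo"
set_master_host "$demo" my-master
grep '^scp' "$demo"
```

On the control node you would run `set_master_host building-k8s-cluster.sh <your-master-hostname>` from the cloned directory.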

[root@zero-vmc-az ansible-k8s-cluster]# vi building-k8s-cluster.sh

<omitted above>

echo -e "=================================================================================================================================="

echo -e "Copy K8S Master kube config to local"

echo -e "=================================================================================================================================="

mkdir ~/.kube

scp k8s-master-az:/etc/kubernetes/admin.conf ~/.kube/config

<omitted below>

[root@zero-vmc-az ansible-k8s-cluster]# ./building-k8s-cluster.sh

==================================================================================================================================

Execute Ansible Playbook kube-dependencies.yml

==================================================================================================================================

PLAY [all] **********************************************************************************************************************************************************************************************************************************

TASK [Gathering Facts] **********************************************************************************************************************************************************************************************************************

ok: [worker1]

ok: [master]

ok: [worker2]

TASK [install Docker] ***********************************************************************************************************************************************************************************************************************

changed: [master]

changed: [worker2]

changed: [worker1]

TASK [start Docker] *************************************************************************************************************************************************************************************************************************

changed: [worker2]

changed: [master]

changed: [worker1]

TASK [disable SELinux] **********************************************************************************************************************************************************************************************************************

changed: [worker1]

changed: [worker2]

changed: [master]

TASK [disable SELinux on reboot] ************************************************************************************************************************************************************************************************************

[WARNING]: SELinux state change will take effect next reboot

changed: [master]

changed: [worker2]

changed: [worker1]

TASK [ensure net.bridge.bridge-nf-call-ip6tables is set to 1] *******************************************************************************************************************************************************************************

changed: [master]

changed: [worker2]

changed: [worker1]

TASK [ensure net.bridge.bridge-nf-call-iptables is set to 1] ********************************************************************************************************************************************************************************

changed: [master]

changed: [worker1]

changed: [worker2]

TASK [add Kubernetes' YUM repository] *******************************************************************************************************************************************************************************************************

changed: [worker1]

changed: [master]

changed: [worker2]

TASK [install kubelet] **********************************************************************************************************************************************************************************************************************

changed: [worker2]

changed: [worker1]

changed: [master]

TASK [install kubeadm] **********************************************************************************************************************************************************************************************************************

changed: [master]

changed: [worker1]

changed: [worker2]

TASK [start kubelet] ************************************************************************************************************************************************************************************************************************

changed: [master]

changed: [worker1]

changed: [worker2]

PLAY [master] *******************************************************************************************************************************************************************************************************************************

TASK [Gathering Facts] **********************************************************************************************************************************************************************************************************************

ok: [master]

PLAY RECAP **********************************************************************************************************************************************************************************************************************************

master : ok=12 changed=10 unreachable=0 failed=0

worker1 : ok=11 changed=10 unreachable=0 failed=0

worker2 : ok=11 changed=10 unreachable=0 failed=0

==================================================================================================================================

Execute Ansible Playbook master.yml

==================================================================================================================================

PLAY [master] *******************************************************************************************************************************************************************************************************************************

TASK [Gathering Facts] **********************************************************************************************************************************************************************************************************************

ok: [master]

TASK [initialize the cluster] ***************************************************************************************************************************************************************************************************************

changed: [master]

TASK [create .kube directory] ***************************************************************************************************************************************************************************************************************

changed: [master]

TASK [copy admin.conf to user's kube config] ************************************************************************************************************************************************************************************************

changed: [master]

TASK [install Pod network] ******************************************************************************************************************************************************************************************************************

changed: [master]

PLAY RECAP **********************************************************************************************************************************************************************************************************************************

master : ok=5 changed=4 unreachable=0 failed=0

==================================================================================================================================

Install kubectl to local

==================================================================================================================================

Loaded plugins: fastestmirror, langpacks

Loading mirror speeds from cached hostfile

kubernetes/signature | 454 B 00:00:00

Retrieving key from https://packages.cloud.google.com/yum/doc/yum-key.gpg

Importing GPG key 0xA7317B0F:

Userid : "Google Cloud Packages Automatic Signing Key <gc-team@google.com>"

Fingerprint: d0bc 747f d8ca f711 7500 d6fa 3746 c208 a731 7b0f

From : https://packages.cloud.google.com/yum/doc/yum-key.gpg

Retrieving key from https://packages.cloud.google.com/yum/doc/rpm-package-key.gpg

kubernetes/signature | 1.4 kB 00:00:00 !!!

kubernetes/primary | 47 kB 00:00:00

kubernetes 336/336

Resolving Dependencies

--> Running transaction check

---> Package kubectl.x86_64 0:1.14.0-0 will be installed

--> Finished Dependency Resolution

Dependencies Resolved

=============================================================================================================================================================================================================================================

Package Arch Version Repository Size

=============================================================================================================================================================================================================================================

Installing:

kubectl x86_64 1.14.0-0 kubernetes 9.5 M

Transaction Summary

=============================================================================================================================================================================================================================================

Install 1 Package

Total download size: 9.5 M

Installed size: 41 M

Downloading packages:

warning: /var/cache/yum/x86_64/7/kubernetes/packages/2b52e839216dfc620bd1429cdb87d08d00516eaa75597ad4491a9c1e7db3c392-kubectl-1.14.0-0.x86_64.rpm: Header V4 RSA/SHA512 Signature, key ID 3e1ba8d5: NOKEY ] 0.0 B/s | 7.6 MB --:--:-- ETA

Public key for 2b52e839216dfc620bd1429cdb87d08d00516eaa75597ad4491a9c1e7db3c392-kubectl-1.14.0-0.x86_64.rpm is not installed

2b52e839216dfc620bd1429cdb87d08d00516eaa75597ad4491a9c1e7db3c392-kubectl-1.14.0-0.x86_64.rpm | 9.5 MB 00:00:00

Retrieving key from https://packages.cloud.google.com/yum/doc/yum-key.gpg

Importing GPG key 0xA7317B0F:

Userid : "Google Cloud Packages Automatic Signing Key <gc-team@google.com>"

Fingerprint: d0bc 747f d8ca f711 7500 d6fa 3746 c208 a731 7b0f

From : https://packages.cloud.google.com/yum/doc/yum-key.gpg

Retrieving key from https://packages.cloud.google.com/yum/doc/rpm-package-key.gpg

Importing GPG key 0x3E1BA8D5:

Userid : "Google Cloud Packages RPM Signing Key <gc-team@google.com>"

Fingerprint: 3749 e1ba 95a8 6ce0 5454 6ed2 f09c 394c 3e1b a8d5

From : https://packages.cloud.google.com/yum/doc/rpm-package-key.gpg

Running transaction check

Running transaction test

Transaction test succeeded

Running transaction

Installing : kubectl-1.14.0-0.x86_64 1/1

Verifying : kubectl-1.14.0-0.x86_64 1/1

Installed:

kubectl.x86_64 0:1.14.0-0

Complete!

==================================================================================================================================

Copy K8S Master kube config to local

==================================================================================================================================

admin.conf 100% 5444 4.8MB/s 00:00

==================================================================================================================================

Check the cluster status

==================================================================================================================================

NAME STATUS ROLES AGE VERSION

k8s-master-az NotReady master 27s v1.14.0

==================================================================================================================================

Execute Ansible Playbook worker.yml

==================================================================================================================================

PLAY [master] *******************************************************************************************************************************************************************************************************************************

TASK [get join command] *********************************************************************************************************************************************************************************************************************

changed: [master]

TASK [set join command] *********************************************************************************************************************************************************************************************************************

ok: [master]

PLAY [workers] ******************************************************************************************************************************************************************************************************************************

TASK [Gathering Facts] **********************************************************************************************************************************************************************************************************************

ok: [worker1]

ok: [worker2]

TASK [join cluster] *************************************************************************************************************************************************************************************************************************

changed: [worker1]

changed: [worker2]

PLAY RECAP **********************************************************************************************************************************************************************************************************************************

master : ok=2 changed=1 unreachable=0 failed=0

worker1 : ok=2 changed=1 unreachable=0 failed=0

worker2 : ok=2 changed=1 unreachable=0 failed=0

==================================================================================================================================

Check the cluster status

==================================================================================================================================

NAME STATUS ROLES AGE VERSION

k8s-master-az Ready master 46s v1.14.0

k8s-worker01-az NotReady <none> 11s v1.14.0

k8s-worker02-az NotReady <none> 11s v1.14.0

If the installation succeeded, the final output should look like the above.

Check again after a short while, and STATUS should change to Ready.

[root@zero-vmc-az ansible-k8s-cluster]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-master-az Ready master 80s v1.14.0

k8s-worker01-az Ready <none> 45s v1.14.0

k8s-worker02-az Ready <none> 45s v1.14.0

[root@zero-vmc-az ansible-k8s-cluster]#


[root@zero-vmc-az ansible-k8s-cluster]# kubectl version --short

Client Version: v1.14.0

Server Version: v1.14.0

[root@zero-vmc-az ansible-k8s-cluster]# kubectl cluster-info

Kubernetes master is running at https://10.0.4.4:6443

KubeDNS is running at https://10.0.4.4:6443/api/v1/namespaces/kube-system/services/kube-dns:dns/proxy

To further debug and diagnose cluster problems, use 'kubectl cluster-info dump'.

[root@zero-vmc-az ansible-k8s-cluster]# kubectl get pods --all-namespaces

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system coredns-fb8b8dccf-7bzpl 1/1 Running 0 96s

kube-system coredns-fb8b8dccf-r5j5h 1/1 Running 0 96s

kube-system etcd-k8s-master-az 1/1 Running 0 48s

kube-system kube-apiserver-k8s-master-az 1/1 Running 0 32s

kube-system kube-controller-manager-k8s-master-az 1/1 Running 0 32s

kube-system kube-flannel-ds-amd64-42bzr 1/1 Running 0 80s

kube-system kube-flannel-ds-amd64-7z5cl 1/1 Running 0 95s

kube-system kube-flannel-ds-amd64-nxmfl 1/1 Running 0 80s

kube-system kube-proxy-8fghj 1/1 Running 0 80s

kube-system kube-proxy-kjn9v 1/1 Running 0 80s

kube-system kube-proxy-tb56r 1/1 Running 0 95s

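kube-system kube-scheduler-k8s-master-az 1/1 Running 0 46s

Congratulations!

Kubernetes, at its latest release at the time of writing (v1.14.0), is now installed and running properly.

You can now build out as many Kubernetes cluster nodes as you like. ^^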

While we are at it, let's bring up the K8S dashboard as well.

4. Launch the K8S Dashboard

[root@zero-vmc-az ansible-k8s-cluster]# kubectl get pods --all-namespaces

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system coredns-fb8b8dccf-7bzpl 1/1 Running 0 2m10s

kube-system coredns-fb8b8dccf-r5j5h 1/1 Running 0 2m10s

kube-system etcd-k8s-master-az 1/1 Running 0 82s

kube-system kube-apiserver-k8s-master-az 1/1 Running 0 66s

kube-system kube-controller-manager-k8s-master-az 1/1 Running 0 66s

kube-system kube-flannel-ds-amd64-42bzr 1/1 Running 0 114s

kube-system kube-flannel-ds-amd64-7z5cl 1/1 Running 0 2m9s

kube-system kube-flannel-ds-amd64-nxmfl 1/1 Running 0 114s

kube-system kube-proxy-8fghj 1/1 Running 0 114s

kube-system kube-proxy-kjn9v 1/1 Running 0 114s

kube-system kube-proxy-tb56r 1/1 Running 0 2m9s

kube-system kube-scheduler-k8s-master-az 1/1 Running 0 80s

[root@zero-vmc-az ansible-k8s-cluster]# curl -O https://raw.githubusercontent.com/kubernetes/dashboard/v1.10.1/src/deploy/recommended/kubernetes-dashboard.yaml

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 4577 100 4577 0 0 15707 0 --:--:-- --:--:-- --:--:-- 15674

[root@zero-vmc-az ansible-k8s-cluster]# kubectl create -f kubernetes-dashboard.yaml

secret/kubernetes-dashboard-certs created

secret/kubernetes-dashboard-csrf created

serviceaccount/kubernetes-dashboard created

role.rbac.authorization.k8s.io/kubernetes-dashboard-minimal created

rolebinding.rbac.authorization.k8s.io/kubernetes-dashboard-minimal created

deployment.apps/kubernetes-dashboard created

service/kubernetes-dashboard created

[root@zero-vmc-az ansible-k8s-cluster]# kubectl get pods --all-namespaces

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system coredns-fb8b8dccf-7bzpl 1/1 Running 0 3m18s

kube-system coredns-fb8b8dccf-r5j5h 1/1 Running 0 3m18s

kube-system etcd-k8s-master-az 1/1 Running 0 2m30s

kube-system kube-apiserver-k8s-master-az 1/1 Running 0 2m14s

kube-system kube-controller-manager-k8s-master-az 1/1 Running 0 2m14s

kube-system kube-flannel-ds-amd64-42bzr 1/1 Running 0 3m2s

kube-system kube-flannel-ds-amd64-7z5cl 1/1 Running 0 3m17s

kube-system kube-flannel-ds-amd64-nxmfl 1/1 Running 0 3m2s

kube-system kube-proxy-8fghj 1/1 Running 0 3m2s

kube-system kube-proxy-kjn9v 1/1 Running 0 3m2s

kube-system kube-proxy-tb56r 1/1 Running 0 3m17s

kube-system kube-scheduler-k8s-master-az 1/1 Running 0 2m28s

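kube-system kubernetes-dashboard-5f7b999d65-2nfrc 1/1 Running 0 7s

Next, install a desktop environment and xrdp on zero-vmc-az. The dashboard will be opened from that node's localhost, so you need a remote desktop session on it.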

[root@zero-vmc-az ansible-k8s-cluster]#

[root@zero-vmc-az ansible-k8s-cluster]# rpm -Uvh http://mirror.centos.org/centos/7/extras/x86_64/Packages/epel-release-7-11.noarch.rpm

Retrieving http://mirror.centos.org/centos/7/extras/x86_64/Packages/epel-release-7-11.noarch.rpm

Preparing... ################################# [100%]

Updating / installing...

1:epel-release-7-11 ################################# [100%]

[root@zero-vmc-az ansible-k8s-cluster]# rpm -Uvh http://li.nux.ro/download/nux/dextop/el7/x86_64/nux-dextop-release-0-1.el7.nux.noarch.rpm

Retrieving http://li.nux.ro/download/nux/dextop/el7/x86_64/nux-dextop-release-0-1.el7.nux.noarch.rpm

warning: /var/tmp/rpm-tmp.Y8CqOR: Header V4 RSA/SHA1 Signature, key ID 85c6cd8a: NOKEY

Preparing... ################################# [100%]

Updating / installing...

1:nux-dextop-release-0-1.el7.nux ################################# [100%]

[root@zero-vmc-az ansible-k8s-cluster]# yum update

[root@zero-vmc-az ansible-k8s-cluster]# yum groupinstall "GNOME Desktop" "Graphical Administration Tools"

Loaded plugins: fastestmirror, langpacks

There is no installed groups file.

Maybe run: yum groups mark convert (see man yum)

Loading mirror speeds from cached hostfile

<omitted>

Complete!

Complete!

[root@zero-vmc-az ansible-k8s-cluster]# ln -sf /lib/systemd/system/runlevel5.target /etc/systemd/system/default.target

[root@zero-vmc-az ansible-k8s-cluster]# yum -y install xrdp tigervnc-server

Loaded plugins: fastestmirror, langpacks

Loading mirror speeds from cached hostfile

* epel: mirror.premi.st

* nux-dextop: mirror.li.nux.ro

Resolving Dependencies

--> Running transaction check

---> Package tigervnc-server.x86_64 0:1.8.0-13.el7 will be installed

---> Package xrdp.x86_64 1:0.9.9-1.el7 will be installed

--> Processing Dependency: xrdp-selinux = 1:0.9.9-1.el7 for package: 1:xrdp-0.9.9-1.el7.x86_64

--> Processing Dependency: xorgxrdp for package: 1:xrdp-0.9.9-1.el7.x86_64

--> Running transaction check

---> Package xorgxrdp.x86_64 0:0.2.9-1.el7 will be installed

---> Package xrdp-selinux.x86_64 1:0.9.9-1.el7 will be installed

--> Finished Dependency Resolution

Dependencies Resolved xrdp-selinux.x86_64 1:0.9.9-1.el7

Complete!

[root@zero-vmc-az ansible-k8s-cluster]# systemctl start xrdp.service

[root@zero-vmc-az ansible-k8s-cluster]# systemctl enable xrdp.service

Created symlink from /etc/systemd/system/multi-user.target.wants/xrdp.service to /usr/lib/systemd/system/xrdp.service.
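If you need to repeat this on another node, the commands above can be collected into one non-interactive shell function. This is a sketch under several assumptions: CentOS 7, a root shell, and that the two release RPM URLs used above are still reachable. The name `setup_rdp_desktop` is hypothetical. Each `yum` call gets `-y`, so the interactive `yum update` no longer prompts. The `ln -sf .../runlevel5.target` symlink is replaced with `systemctl set-default graphical.target`, which sets the same default boot target because `runlevel5.target` is an alias of `graphical.target`.

```shell
# setup_rdp_desktop: hypothetical helper that replays the xrdp steps above
# without prompts. Assumes CentOS 7, run as root, and that the release RPM
# URLs below are still reachable. rpm -Uvh fails if they are already installed.
setup_rdp_desktop() {
  # The two extra repositories used in the log above
  rpm -Uvh http://mirror.centos.org/centos/7/extras/x86_64/Packages/epel-release-7-11.noarch.rpm || return 1
  rpm -Uvh http://li.nux.ro/download/nux/dextop/el7/x86_64/nux-dextop-release-0-1.el7.nux.noarch.rpm || return 1

  # -y answers "yes" to every yum prompt
  yum -y update || return 1
  yum -y groupinstall "GNOME Desktop" "Graphical Administration Tools" || return 1

  # Boot into the graphical target (same effect as the runlevel5.target symlink)
  systemctl set-default graphical.target || return 1

  yum -y install xrdp tigervnc-server || return 1

  # Start xrdp now, then enable it on every boot
  systemctl start xrdp.service || return 1
  systemctl enable xrdp.service
}
```

A call such as `setup_rdp_desktop && netstat -anp | grep :3389` ends with the same listener check shown in the log.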

[root@zero-vmc-az ansible-k8s-cluster]# netstat -anp | grep :3389

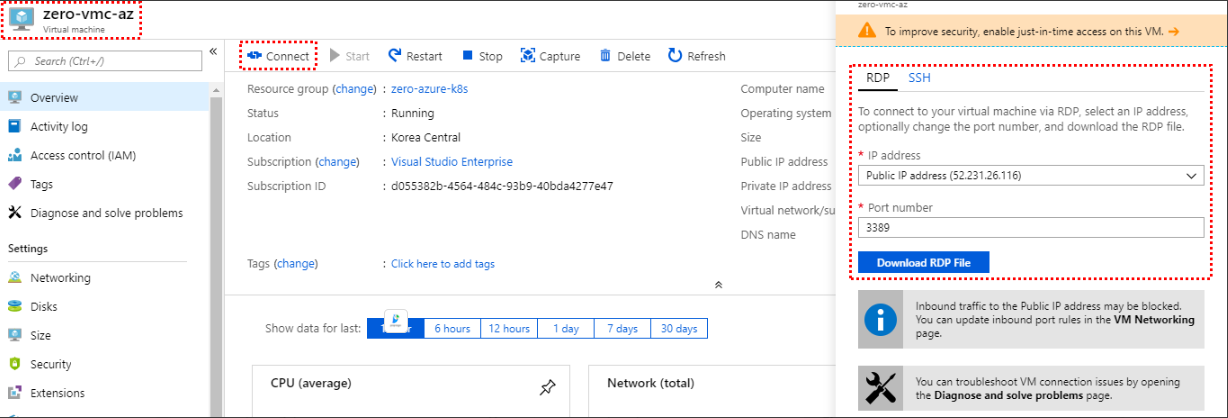

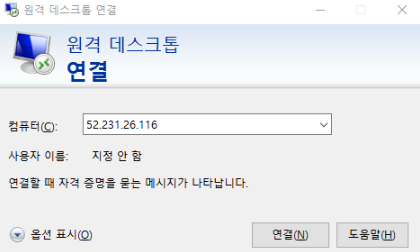

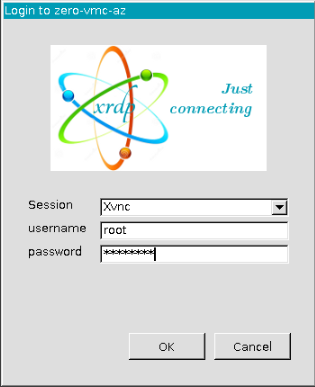

tcp 0 0 0.0.0.0:3389 0.0.0.0:* LISTEN 86922/xrdpAzure의 RDP 접속을 통해서 zerob-vmc-az에 접속한다.

You can also connect with a Remote Desktop Connection client instead.
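If the RDP connection fails, first confirm that xrdp is actually listening on 3389. Port 3389 must also be open in the inbound port rules selected when the VM was created. The `netstat | grep` check above can be turned into a pass/fail test with the small helper below. The name `is_listening` is hypothetical, and the sketch assumes `netstat -anp` output, where the local address is the 4th column and the state is the 6th.

```shell
# is_listening PORT: reads `netstat -anp` output on stdin and exits 0 if some
# line is in LISTEN state with a local address (4th column) ending in :PORT.
is_listening() {
  awk -v port="$1" '
    $6 == "LISTEN" && $4 ~ (":" port "$") { found = 1 }
    END { exit !found }
  '
}
```

For example, `netstat -anp | is_listening 3389 && echo "xrdp is listening"` prints the message only when the listener from the log above is present.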

In a terminal window on the remote desktop, run kubectl proxy so that the dashboard can be reached through localhost.

[root@zero-vmc-az ansible-k8s-cluster]# kubectl proxy

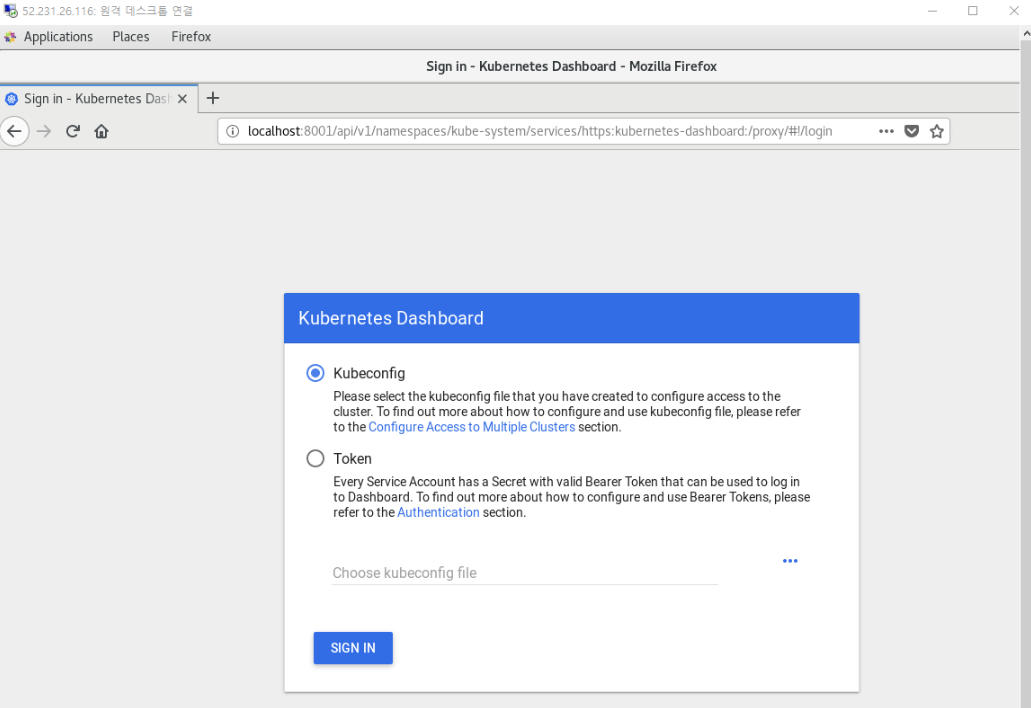

Starting to serve on 127.0.0.1:8001브라우저 창에서 다음을 입력한다.

http://localhost:8001/api/v1/namespaces/kube-system/services/https:kubernetes-dashboard:/proxy/
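The address above follows the API server's service proxy path, `/api/v1/namespaces/<namespace>/services/[<scheme>:]<service>:[<port_name>]/proxy/`. Here `https:` tells the API server to reach the dashboard service over HTTPS. The port name after the second colon is left empty, which the Kubernetes documentation allows when the service port is unnamed. The helper below is a hypothetical sketch that assembles such URLs. It assumes `kubectl proxy` is on its default `127.0.0.1:8001`.

```shell
# proxy_url NAMESPACE SERVICE [SCHEME] [PORT_NAME] [PROXY_BASE]
# Builds an API server service-proxy URL of the kind served by `kubectl proxy`.
proxy_url() {
  local ns="$1" svc="$2" scheme="${3:-}" port="${4:-}" base="${5:-http://localhost:8001}"
  local prefix=""
  # Optional scheme prefix, e.g. "https:" for services that only speak TLS
  if [ -n "$scheme" ]; then
    prefix="${scheme}:"
  fi
  printf '%s/api/v1/namespaces/%s/services/%s%s:%s/proxy/\n' "$base" "$ns" "$prefix" "$svc" "$port"
}
```

`proxy_url kube-system kubernetes-dashboard https` reproduces the address above. With `kubectl proxy` running in another terminal, `curl -s "$(proxy_url kube-system kubernetes-dashboard https)"` lets you check the proxy path from the shell before switching to the browser.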

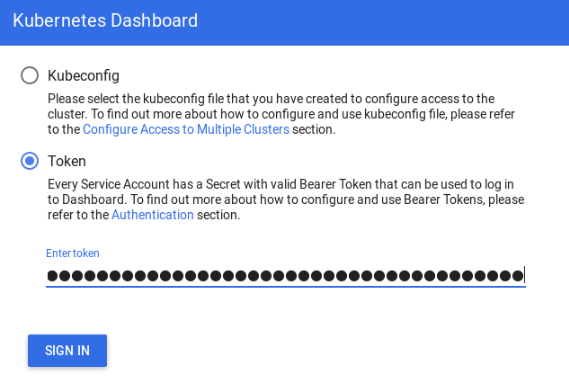

Create a token for logging in to the dashboard. (Run this in the terminal of the remote session.)

[root@zero-gcp-vmc ~]# kubectl apply -f https://gist.githubusercontent.com/chukaofili/9e94d966e73566eba5abdca7ccb067e6/raw/0f17cd37d2932fb4c3a2e7f4434d08bc64432090/k8s-dashboard-admin-user.yaml

serviceaccount/admin-user created

clusterrolebinding.rbac.authorization.k8s.io/admin-user created
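The gist's contents are not reproduced in this post. Judging from the output, it creates a ServiceAccount and a ClusterRoleBinding, both named `admin-user`. The sketch below assumes the binding grants the built-in `cluster-admin` ClusterRole, which is the usual pattern for dashboard login tokens. Writing the manifest yourself avoids depending on a third-party URL. `admin_user_manifest` is a hypothetical helper name. `cluster-admin` grants full control over the cluster, which only suits a test setup like this one.

```shell
# admin_user_manifest: prints a ServiceAccount plus a ClusterRoleBinding that
# grants it cluster-admin (assumed to match what the gist above creates).
admin_user_manifest() {
  cat <<'EOF'
apiVersion: v1
kind: ServiceAccount
metadata:
  name: admin-user
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: admin-user
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- kind: ServiceAccount
  name: admin-user
  namespace: kube-system
EOF
}
```

It can be applied with `admin_user_manifest | kubectl apply -f -`.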

[root@zero-gcp-vmc ~]# kubectl get sa admin-user -n kube-system

NAME SECRETS AGE

admin-user 1 6s

[root@zero-gcp-vmc ~]# kubectl describe sa admin-user -n kube-system

Name: admin-user

Namespace: kube-system

Labels: <none>

Annotations: kubectl.kubernetes.io/last-applied-configuration:

{"apiVersion":"v1","kind":"ServiceAccount","metadata":{"annotations":{},"name":"admin-user","namespace":"kube-system"}}

Image pull secrets: <none>

Mountable secrets: admin-user-token-tsrxb

Tokens: admin-user-token-tsrxb

Events: <none>
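The `Tokens` field above names the secret that holds the token, and `kubectl describe secret` then prints it. The two lookups can also be chained with jsonpath output, as in the minimal sketch below. The helper name is hypothetical. It relies on the ServiceAccount having an auto-created token secret, as in the cluster version used here. From Kubernetes 1.24 onward such secrets are no longer created automatically; `kubectl create token admin-user -n kube-system` issues a token instead.

```shell
# get_sa_token SERVICE_ACCOUNT NAMESPACE: prints the decoded bearer token from
# the first secret listed on the ServiceAccount (pre-1.24 auto-created secret).
get_sa_token() {
  local sa="$1" ns="$2" secret
  # Secret name, shown above as "Mountable secrets" / "Tokens"
  secret=$(kubectl -n "$ns" get serviceaccount "$sa" -o jsonpath='{.secrets[0].name}') || return 1
  if [ -z "$secret" ]; then
    echo "no token secret found for $ns/$sa" >&2
    return 1
  fi
  # Secret data is base64-encoded in the API object, so decode it
  kubectl -n "$ns" get secret "$secret" -o jsonpath='{.data.token}' | base64 --decode
}
```

`get_sa_token admin-user kube-system` prints only the token value, which can be pasted directly into the dashboard login page.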

[root@zero-gcp-vmc ~]# kubectl describe secret admin-user-token-tsrxb -n kube-system

Name: admin-user-token-tsrxb

Namespace: kube-system

Labels: <none>

Annotations: kubernetes.io/service-account.name: admin-user

kubernetes.io/service-account.uid: d6de1afd-5407-11e9-a883-42010a920008

Type: kubernetes.io/service-account-token

Data

====

token: eyJhbGciOiJSUzI1NiIsImtpZCI6IiJ9.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlLXN5c3RlbSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJhZG1pbi11c2VyLXRva2VuLXRzcnhiIiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQubmFtZSI6ImFkbWluLXVzZXIiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC51aWQiOiJkNmRlMWFmZC01NDA3LTExZTktYTg4My00MjAxMGE5MjAwMDgiLCJzdWIiOiJzeXN0ZW06c2VydmljZWFjY291bnQ6a3ViZS1zeXN0ZW06YWRtaW4tdXNlciJ9.DCudHXmDCR6UAJUwLIHr77Fob9kA29xPn9mW9l-sZGiUlOywbOb-2OL2PDs01uGYd0AcvJCo2jlOpHlCYffPEXP7anS8wTfxEEQOsBbPsjCyhRuYFdKvtlzJwPcI4EtBU6OHBW07D9ApuwtetMfDes0PzaPTbYWiPC_drfqBHm0zZe39AhFQYdOw6LarvtTF-Y4kSbrdiJRgJqcRTMR7uyDTa131wokPMv3LOWfj8JrUEPUFRqVYYxpMRfH60H9P6uoyzN7Yh94tmg4hiv9CtATQ46WXDv3gj7dbwdu0HkoL8V2Dh_xv6T3UTKK_2wSR2PjVNMpu6Eti5tI_A1nOuA

ca.crt: 1025 bytes

namespace: 11 bytes
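The token is a JSON Web Token made of three dot-separated parts: header, payload, and signature. The payload is base64url-encoded JSON that names the ServiceAccount the token belongs to, so decoding it is a quick way to confirm you copied the right token. The sketch below decodes only that middle part and does not verify the signature. `jwt_payload` is a hypothetical helper name.

```shell
# jwt_payload TOKEN: prints the decoded JSON payload (2nd dot-separated part)
# of a JWT. No signature verification is performed.
jwt_payload() {
  local p
  # Take the payload and map the base64url alphabet back to standard base64
  p=$(printf '%s' "$1" | cut -d. -f2 | tr '_-' '/+')
  # base64url drops '=' padding; restore it to a multiple of 4 characters
  case $(( ${#p} % 4 )) in
    2) p="${p}==" ;;
    3) p="${p}=" ;;
  esac
  printf '%s\n' "$p" | base64 --decode
}
```

For the token above, the decoded payload contains `"sub":"system:serviceaccount:kube-system:admin-user"` and the secret name `admin-user-token-tsrxb`.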

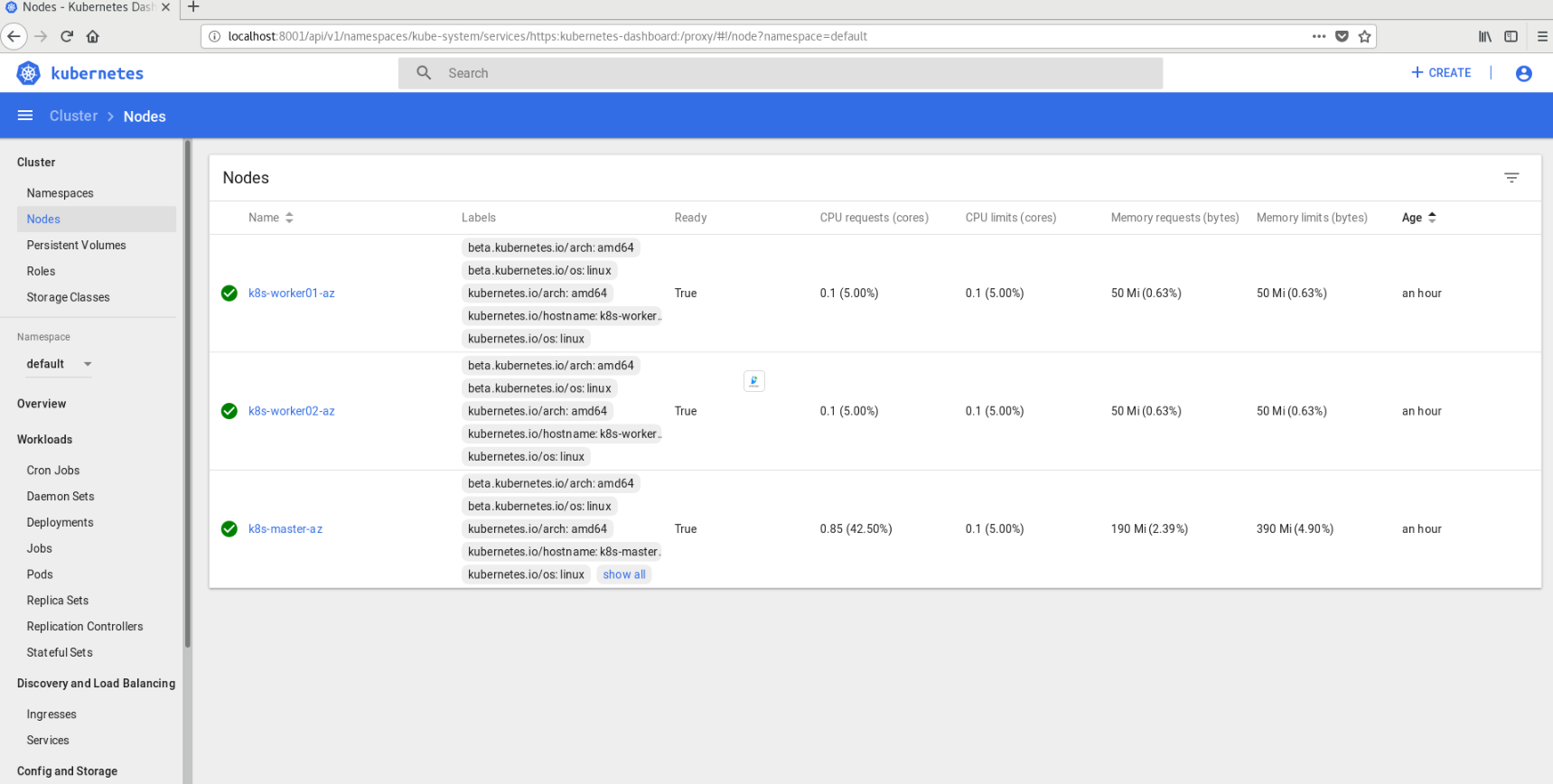

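On the login page, choose Token, paste the token value above, and confirm that the dashboard appears normally.

Congratulations!

Now that your very own Kubernetes cluster is up and running, go ahead and play with K8s as much as you like! ^^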
